Frontiers How computational biology is making us smarter

The Salk Institute is embracing the artificial intelligence revolution and inventing new ways to investigate life.

Salk Professor Terrence Sejnowski wrote the book on artificial intelligence—literally. The author of The Deep Learning Revolution, Sejnowski could not be more excited about how AI will revolutionize research and usher in a new scientific revolution.

“We’re seeing these advances everywhere,” says Sejnowski, who heads the Computational Neurobiology Lab, directs the Crick-Jacobs Center for Theoretical and Computational Biology and holds the Francis Crick Chair. “It wasn’t long ago that people had great difficulty talking to machines, but now cell phones can actually understand our speech.

The changes are even more profound in biology. It’s just unprecedented.”

Machine learning, deep learning and other AI techniques are being used to probe massive data sets, identify useful information and make accurate predictions. “Unprecedented” may be selling the technology short—machine learning is accomplishing the impossible.

Consider the protein folding problem. A protein’s 3D shape dictates its function, but the molecules are far too small to see clearly, even with electron microscopes. Scientists use crystallography and other sophisticated imaging methods to infer protein shapes, but that can take years.

Theoretically, computers should be able to calculate a protein’s 3D shape based on its amino acid sequence, but the computational demands are enormous.

“Everyone said the problem was so difficult, we might never solve it,” says Sejnowski. “If you calculated the required computing, it would take the lifetime of the universe.”

Yet in late 2020, Google sibling company DeepMind solved the problem—for thousands of proteins. In 2021, DeepMind published the structures for every protein in the human body. These accomplishments, once thought unreachable, are only the beginning.”

The Salk Institute has long been at the forefront of computational biology and is doubling down to harness information theory and help solve some of biology’s most challenging problems. Through Salk’s Campaign for the Future, the Institute is recruiting computer scientists and mathematicians, expanding its computational biology infrastructure and building a 100,000-square-foot Science and Technology Center to catalyze interdisciplinary collaboration.

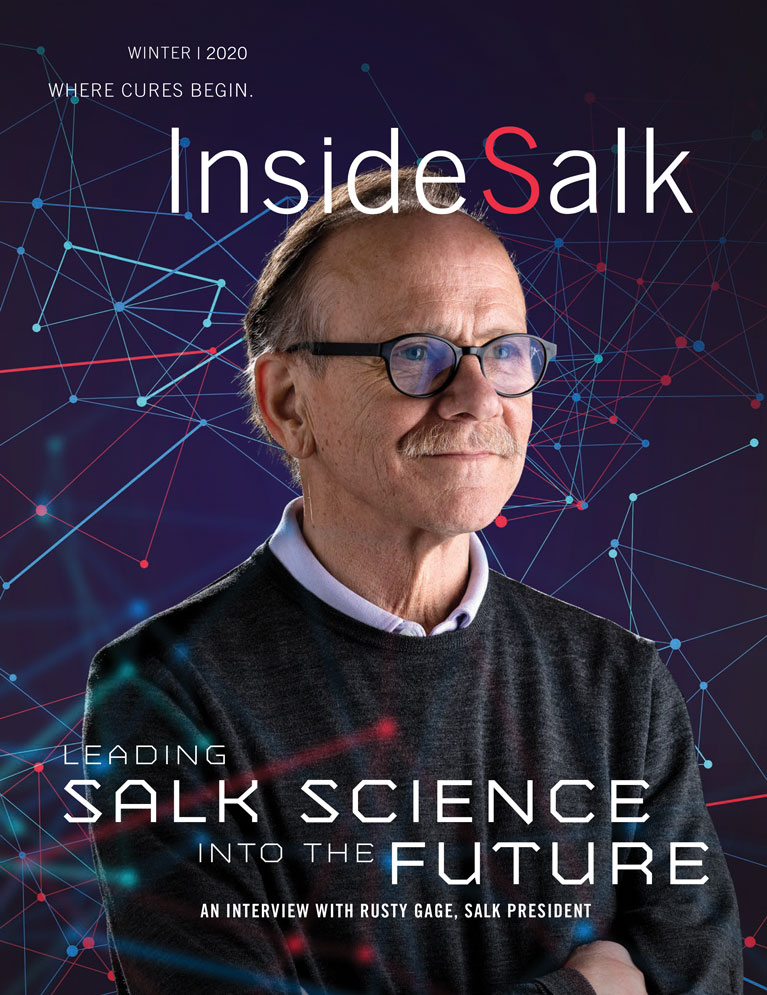

“In the past, we didn’t have the instrumentation to generate all the data we needed,” says Salk President Rusty Gage, Vi and John Adler Chair for Research on Age-Related Neurodegenerative Disease. “That’s not a problem anymore; scientists generate enormous amounts of data. The problem now is analysis and theory building. How do we reduce the enormous data sets to manageable chunks of information that can then be put together in meaningful ways, so we can formulate a new way to look at life? That’s why information theory is so critical.”

“We’re living in a new world and have to completely reassess both how we do things and what we are even capable of doing.”

– Terrence Sejnowski

The Lifetime of the Universe

Protein configurations are only one challenging data set machine learning is unraveling. Neuron interactions may be even more complex. Sejnowski is working with Uri Manor, assistant research professor and director of Salk’s Waitt Advanced Biophotonics Core, and others to generate a global picture of how neurons function in large groups.

The team wants to investigate how synapses on the same dendrite (treelike neuronal structures that receive signals) interact with each other and contribute to larger neural circuits.

Sejnowski calls it “anatomy on steroids.” Traditionally, researchers would trace the connections from a single cell, which could take weeks—and there are a hundred billion neurons in the brain. To complicate matters, the data often comes from extremely thin tissue slices imaged on electron microscopes. Researchers want to connect those slices to see the larger picture, but traditional methods won’t cut it.

“That’s all changed,” says Sejnowski. “Over the last five years, deep learning has made it possible to automatically trace these neurons in a stack of electron microscope sections.”

Researchers have gone from understanding single neurons to reconstructing every neuron in a cubic millimeter of tissue: a hundred thousand neurons with a billion synapses. Now, scientists can start to see how cells are interconnected and model the ways information flows between neurons in different parts of the brain.

“That’s what this revolution is all about,” says Sejnowski. “We’re living in a new world and have to completely reassess both how we do things and what we are even capable of doing.”

“We are using information theory to understand how the nervous system identifies good solutions.”

– Tatyana Sharpee

Information about Information

The sense of smell is one of our first defenses against digesting spoiled food. For animals, it also plays an important role in identifying predators and members of their own species.

From an evolutionary perspective, smell is one of the oldest senses, but it’s the one we know the least about.

Tatyana Sharpee, professor in the Computational Neurobiology Laboratory and holder of the Edwin K. Hunter Chair, is working to understand how smell and other senses are processed in the brain. Sharpee’s lab uses mathematical models, algorithms and a variety of laboratory methods to determine how sensory information moves through the brain and influences decision-making.

This two-way process—information comes in, decisions go out—creates a comprehensive rubric to study vision, hearing, touch and smell. She is particularly interested in how the body “invests” in particular senses in proportion to their survival relevance.

As a postdoctoral fellow at the University of California San Francisco, Sharpee developed an approach called “maximally informative dimensions,” which helps neuroscientists identify neural mechanisms that optimize how organisms assess information.

“We are using information theory to understand how the nervous system identifies good solutions,” says Sharpee.

In recent work, the lab redefined how inhibitory neurons improve information processing. Much like stoplights on a busy road, these cells restrain traffic (information)—but in a good way. By modulating signals, inhibitory neurons literally prevent sensory overload.

But Sharpee found their role goes beyond that. When signals from other parts of the brain are delivered to local circuits through inhibitory neurons, this optimizes information flow. This research focused on retinal pathways but could be applied to other sensory inputs.

“By targeting only the most sparsely responding neurons, inhibitory neurons make it possible for the whole circuit to function well,” says Sharpee.

In real life, this mechanism helps organisms focus on important signals and events. In other words, inhibition helps the brain prioritize the most immediately relevant signals.

Assessing information is key to survival for humans, microbes and everything in between. Tiny C. elegans worms maximize foraging information by searching small areas before shifting to larger regions. This approach appears random, but Sharpee’s lab devised an algorithm that made strategic sense of these worm norms.

This feeds into a larger understanding of information, specifically its costs and rewards. C. elegans pay a metabolic price to gain information about food distribution, but the data ultimately improves their ability to find more fuel.

“The data guide their behavior by trying to maximize information, not the direct payout in terms of the molecules they seek,” says Sharpee. “They are more guided by the information about the distribution.”

Joseph Ecker, professor and director of the Genomic Analysis Laboratory and Howard Hughes Medical Institute investigator, along with Associate Research Professor Margarita Behrens, is taking a more macro view of brain architecture.

Big-Picture Brain Studies

Joseph Ecker, professor and director of the Genomic Analysis Laboratory and Howard Hughes Medical Institute investigator, along with Associate Research Professor Margarita Behrens, is taking a more macro view of brain architecture.

They are collaborating with the Chan Zuckerberg Initiative to expand the Human Cell Atlas, which is a map of every cell type in the body, and have a major NIH grant to build a genomic/epigenomic reference map for the human brain.

“You have two ends of the spectrum here,” says Ecker, who holds the Salk International Council Chair in Genetics. “The Chan Zuckerberg Initiative is looking at how much variation there is between people of different ages and genders. The NIH grant supports mapping the brain in such detail that we have a reference for future work.”

These projects are the latest efforts by Ecker and Behrens to help comprehend the brain’s complexity. The genomics part is relatively easy: All cells have pretty much the same DNA. But epigenomics, the regulatory layer that helps determine which genes get expressed (go to work) and which ones remain silent, is a different story.

These elements can shift based on cell type, function, brain location, age and other factors. Tallying a person’s gene complement is a start, but to truly understand brain function, we need to know which ones are on the job.

The Chan Zuckerberg Initiative work dovetails with Salk’s efforts to improve life span health. The team will focus on human brain diversity: How does neural gene expression differ between men and women; for people between 25 and 80; and even in different regions of one person’s brain?

“This is a little like the early days after the Human Genome Project,” says Behrens. “We had a genome from one person, but we didn’t know much about the variability between people. Here, we’re taking a deep look at gene expression and comparing it across individuals. We want to understand the ground truth for certain types of neurons.”

Ecker, Behrens and colleagues have been awarded over $35 million to study how genes are controlled in different parts of mouse and human brains. This pilot project could expand geometrically, as the NIH has requested proposals for the Brain Initiative Cell Atlas Network, which will support a nationwide effort to comprehensively study brain architecture. (See this issue’s Discoveries.)

These will be some of the most complicated investigations humans have ever attempted. Researchers will study brain genetics, epigenetics and spatial and temporal relationships between cells—research that will produce truly epic amounts of information.

“We’re trying to figure out how we’re going to address all that data,” says Ecker. “Not only analyzing, but storing it, moving it around and collaboratively studying it in different locations. Right now, we’re using a supercomputer for mapping, but even that takes several days to complete the epigenetic profile of a single neuronal cell type.”

Ecker and Behrens are confident machine learning and other computational approaches will help solve this data deluge. New algorithms are already emerging that could accelerate analysis four-fold. Other methods will be needed to scale from 80-million neuron studies to 80-billion. Ecker notes this is already happening, as research groups worldwide are developing methods that accelerate supercomputers, assess complex images and automate many necessary tasks.

“We will know the composition of the human brain in enormous detail,” says Behrens. “The payoff will be the brain feels less like a black box. We won’t know everything, but we will know much more about each neuronal type. Perhaps, in the long run, we’ll learn how to target specific types to help people with neurological diseases.”

“We make these incredibly detailed spatial maps, and now the real challenge is to make sense of what they mean.”

– Pallav Kosuri

Where Engineering and Computation Meet

You’ve seen the pictures: microscopic cellular components lit up with fluorescent proteins. These images are beautiful and informative, but they miss a critical trait—motion.

“There is not a single biological component that does not move,” says Pallav Kosuri, assistant professor in the Integrative Biology Laboratory. “But the vast majority of that universe of movements has never been seen, much less studied.”

Kosuri began his efforts to capture molecular motion when he was a postdoctoral researcher but was hamstrung by his tools. One example is an atomic force microscope, a large machine that uses a needle, much like a phonograph, to scan the surfaces of cells and molecules.

“The whole time I was using it, I kept thinking, why did we build this huge machine to see a single molecule?” says Kosuri. “We should build small machines to see single molecules.”

The solution was an approach called DNA origami, first conceived in the 1980s. Creating synthetic structures from DNA relies on the molecule’s intrinsic base-pairing properties: adenine (A) binds to thymine (T); cytosine (C) binds to guanine (G).

But other combinations of bases, such as A and G or C and T, don’t like to bind. Armed with these simple rules, scientists can now design DNA in precise patterns, forcing the molecules into unique folded shapes (origami). These, in turn, can help visualize individual molecules in motion.

Kosuri uses this technique to add DNA arms to molecules and measure their rotation. Consider the letter “I.” If you look down vertically, at its cap, “I” looks like a dot. Is it spinning? Who can tell? But a “T” adds an arm, making it easier to track motion.

“Now we can study these rotational movements,” says Kosuri, “and there are countless reactions that have never been investigated that we can start to really see and understand.”

These capabilities can help researchers visualize how DNA is unwound when being read by a cell, or how CRISPR gene-editing machinery searches for target sequences in the genome.

Computational methods play an integral role with DNA origami—designing the origami constructs, simulating how they will behave, analyzing measurements and generalizing the findings.

Kosuri’s lab is also developing new ways to study heart failure. Using patient tissue samples, his team is mapping every cell’s molecular profile and location. These studies provide incredible insights into how heart disease affects individual molecules and cells, but not surprisingly, the data can be overwhelming.

“We make these incredibly detailed spatial maps, and now the real challenge is to make sense of what they mean,” says Kosuri. “We’re seeing which genes are turned on and off in each cell, the exact shapes of these cells, their locations in three-dimensional space, which cells are next to each other and which genes are active in those cells, and so on. Now, we’re working with computational biologists to develop new methods to make sense of it all. If we succeed, we might be able to catch earlier signs of heart disease and figure out how to keep heart cells healthy in the face of aging.”

“We focus on dexterous behaviors because they are some of the least understood, and they’re fundamental to our interactions with the world.”

– Eiman Azim

People in Motion

Eiman Azim is trying to figure out how people move, a surprisingly complex problem. As assistant professor in the Molecular Neurobiology Laboratory and William Scandling Developmental Chair, Azim is studying brain outputs, the corresponding feedback, and how the nervous system makes adjustments. Even understanding how the brain determines a limb’s positioning—a multisensory activity called proprioception— requires tremendous scientific bandwidth.

We focus on dexterous behaviors because they are some of the least understood, and they’re fundamental to our interactions with the world,” says Azim. “A lot of these movements, like buttoning your shirt or typing a text, are susceptible to disease and injury. If we can understand how these movements are controlled, we’ll be in a better place to repair them when they go wrong.”

The questions come in layers, and those layers have their own layers. For example, what does healthy movement even look like? Azim is combining machine learning, computer vision and engineering to find those answers.

However, training neural networks to recognize different parts of the body in 3D space is complicated. Azim needs training data—observations that teach the neural network. Traditionally, people have supplied this data, but the pace is slow, the data prone to human error and the results hard to generalize.

“If you train a subset of your data, it works well on the rest of the data acquired in that environment, with the same lighting conditions and camera angles,” says Azim. “But if you change anything—the lighting, the room you’re in—you have to start over.”

To optimize the process, Azim’s lab has been working with engineers at UC San Diego’s Qualcomm Institute (QI), developing an experimental rig, called GlowTrack, which combines fluorescence (to mark body parts), orchestrated strobe lighting and cameras. On a good day, a human researcher might tag a thousand images to train the network. GlowTrack can process millions in the same amount of time.

“By having a much larger training data set, we can dramatically expand how the network generalizes,” says Azim. “Different experimenters can use different setups without doing any new training.”

This refinement did not happen overnight. Azim worked for months with his QI collaborators—designing, testing, redesigning, retesting. That collaboration worked well, but Azim and others believe these capabilities should be in-house at Salk. He and Kenta Asahina, assistant professor in the Molecular Neurobiology Laboratory and holder of the Helen McLoraine Developmental Chair in Neurobiology, are developing an Instrument Design, Engineering and Application (IDEA) Laboratory to make that happen.

“We need engineers on the Salk campus to work with us and develop commercially unavailable solutions to meet our experimental needs,” says Azim. “The IDEA Lab is just a start. Once the Science & Technology Center is built, engineers and biologists will work hand-in-hand, and that will go way beyond building devices to actually pursuing academic bioengineering research with trained engineers.”

Edward Stites is an assistant professor in the Integrative Biology Laboratory and Hearst Foundation Developmental Chair. His lab is heavily focused on computational and mathematical methods.

“Simple” Math

Edward Stites is an assistant professor in the Integrative Biology Laboratory and Hearst Foundation Developmental Chair. His lab is heavily focused on computational and mathematical methods.

“We really enjoy taking a lot of data where we already understand how things fit together, and then we use mathematics to rigorously investigate the system to get new ideas and new hypotheses,” says Stites.

His team is developing new ways to investigate cancer-causing mutations and their responses to treatment, often studying RAS mutations. RAS has been heavily scrutinized for decades, providing abundant data for computational methods.

Recently, the lab used mathematics to identify RAS mutations that indicate its sensitivity to an available drug. As a result, thousands of colon cancer patients now have a better treatment option.

But something about RAS bothered Stites. Everybody in the field believed RAS mutations were found in 33 percent of cancer patients. That’s been the accepted figure for decades, but is it true? Examining the question from 30,000 feet, the numbers didn’t make sense to Stites. Common tumors, like breast and prostate, hardly ever have RAS mutations.

He envisioned a relatively simple side project and gave it to a lab scientist who came back empty-handed. Stites scratched his head and gave it to another researcher. Same result. There was a problem: two data sets that barely spoke to each other.

Stites and his scientists were forced to dig deeper to find the problem. While the data existed for mutation rates in different cancers, and for the percentages of people who contract those specific cancers, the two data sets could not be readily combined.

“The epidemiologists who provide the data for cancer rates in the US use a detailed, thorough naming system to characterize each cancer,” says Stites. “But cancer genome data are far less granular. A study that measures the mutations that cause breast cancer might include 20 or more subtypes of breast cancer, and not provide the details that would allow it to be mapped back to the epidemiological data. The two types of data were incompatible.”

Slowly, they figured out how to make these different data sets talk to each other—and the accepted numbers weren’t even close.

“RAS isn’t in 33 percent of cancers, it’s more like 15 percent,” says Stites. “It’s less than half as abundant as people thought, which is important because labs spend a lot of money studying RAS, mostly because they think it’s so common.”

These efforts to align data sets could have many other applications, such as reducing biases in population data. White men are disproportionately represented in genomic studies, but the lab’s mathematical approaches could mitigate those misperceptions and provide a truer picture of the actual disease burden across populations.

“I look at computational biology like solving a logic problem,” says Stites. “Like playing Clue. You’re trying to solve the crime with as little information as possible. You can keep playing the game until you stumble upon the right answer, or you can get the answer much quicker by combining the data you have in the right way. The problems we solve are harder and more meaningful than a children’s game, but solving them still provides a fun sense of accomplishment, along with the satisfaction of doing work that makes a difference.”

“The things we can accomplish with machine learning are just amazing. It’s like the first electron microscopes or deciphering the structures of DNA—just an incredible time for discovery.”

– Martin Hetzer

Life Span Health

Professor Martin Hetzer believes information theory will help solve many puzzles. He and others are betting this discipline will unlock the secrets of biological resilience.

“We want to understand the circuits that contribute to good health,” says Hetzer, who is Salk’s senior vice president/chief science officer and holds the Jesse and Caryl Philips Foundation Chair. “If we can figure out how they work, we can take measures to modulate them and hopefully better control disease.”

Hetzer and colleagues have been working on the problem for years. Their collaborative 2018 study identified molecular signatures in skin cells that could accurately predict a person’s age. Those findings are continuing to spawn new inquiries.

Discovering age markers was a first step towards intervening and improving health. Cancer, heart disease and dementia are all associated with aging. Perhaps adjusting these age-indicating molecular signatures could reduce a person’s disease risk.

“The study provides a foundation for quantitatively addressing unresolved questions in human aging, such as the rate of aging during times of stress,” says Hetzer, who was co-senior author on the paper.

Machine learning was a critical part of this study, and many more that followed, creating a predictive algorithm that was far more accurate than previous methods. “The things we can accomplish with machine learning are just amazing,” says Hetzer. “It’s like the first electron microscopes or deciphering the structure of DNA—just an incredible time for discovery.”

Incorporating sophisticated computational approaches will continue to accelerate Salk’s ability to answer some of the most challenging scientific questions and analyze massive amounts of raw data from cell and molecular biologists, neuroscientists, plant scientists and many others.

“We have this incredible computational toolset that did not even exist just a few years ago,” says Hetzer. “That’s why we’re reimagining the Salk Institute: recruiting mathematicians, computer scientists and engineers; adding emerging technologies; and building a Science and Technology Center to foster even more collaboration. Our goal is nothing less than revolutionizing science.”

Support a legacy where cures begin.

Featured Stories

Joan and Irwin Jacobs — A perfect matchJoan and Irwin Jacobs donate $100 million, a transformative gift, helping to launch Salk’s five-year, $500M philanthropic and scientific Campaign for the Future.

Joan and Irwin Jacobs — A perfect matchJoan and Irwin Jacobs donate $100 million, a transformative gift, helping to launch Salk’s five-year, $500M philanthropic and scientific Campaign for the Future. How computational biology is making us smarterThe Salk Institute is embracing the artificial intelligence revolution and inventing new ways to investigate life. Machine learning, deep learning and other AI techniques are being used to probe massive data sets, identify useful information and make accurate predictions.

How computational biology is making us smarterThe Salk Institute is embracing the artificial intelligence revolution and inventing new ways to investigate life. Machine learning, deep learning and other AI techniques are being used to probe massive data sets, identify useful information and make accurate predictions.  Dan Tierney – Biology Meets TechnologyDan Tierney is no stranger to big data. When Tierney founded a financial technology firm in the late 1990s, long before he joined the Salk Institute’s Board of Trustees, he was fascinated by emerging computational approaches that could crunch data and reveal hidden truths.

Dan Tierney – Biology Meets TechnologyDan Tierney is no stranger to big data. When Tierney founded a financial technology firm in the late 1990s, long before he joined the Salk Institute’s Board of Trustees, he was fascinated by emerging computational approaches that could crunch data and reveal hidden truths.

Natalie Luhtala — Shaping pancreatic cancer research to have real world applicationsThis year, Staff Scientist Natalie Luhtala celebrates her 10-year work anniversary at the Institute. In her current role, she’s directing a project examining an elusive signaling pathway to identify new targets for treating pancreatic cancer.

Natalie Luhtala — Shaping pancreatic cancer research to have real world applicationsThis year, Staff Scientist Natalie Luhtala celebrates her 10-year work anniversary at the Institute. In her current role, she’s directing a project examining an elusive signaling pathway to identify new targets for treating pancreatic cancer. Laura Newman — From mitochondria to craft beer and backLaura Newman, a Salk postdoctoral researcher, fell in love with science in a lab in college and switched from a medical program to pursuing biochemistry and developmental biology. At Salk, her main focus is on how cells can recognize when they’re sick or damaged in order to activate the immune system for cell survival.

Laura Newman — From mitochondria to craft beer and backLaura Newman, a Salk postdoctoral researcher, fell in love with science in a lab in college and switched from a medical program to pursuing biochemistry and developmental biology. At Salk, her main focus is on how cells can recognize when they’re sick or damaged in order to activate the immune system for cell survival.